Whilst it’s not uncommon to transfer data between systems by means of text files, the files themselves often turn-up in a binary format.

They may have been compressed or even encrypted before transit.

Turning them back into text so that they can be processed may be a bit of an overhead.

Not only can you end up with two copies of the data ( the binary original and the re-constituted text version), the process of conversion may be both time consuming and resource intensive.

In the case of encrypted files, persisting the unencrypted data in a file may have additional security implications.

Fortunately, it’s possible to load data from such binary formatted files into Oracle without first having to write it to a text file.

Irrespective of whether your incoming feed file is enrcypted or merely compressed, loading it into Oracle should be effortless for you after reading this.

You need not worry about any potential pitfalls because I’ve already fallen into each pit in turn, as I shall now recount.

To start with, I’ll be looking at how to use an External Table Preprocessor to load data from a compressed file.

I’ll then go through loading data that’s GPG encrypted.

Following that, we’ll take a look at why PDB_OS_CREDENTIAL might not be the help you hoped it might be when dealing with GPG decryption and how SQL*Loader can help.

Whilst I was writing this, Oracle considerately released 23c Free and made it available in a VirtualBox appliance running Oracle Linux Server 8.7, so it’d be rude not to use it for the examples that follow…

Setup

To start with I’ve created a new OS user called app_user and a group called app_os.

I’ve made this the primary group for app_user as well as adding oracle to the group.

sudo useradd -m app_user

sudo groupadd app_os

sudo usermod -g app_os app_user

sudo usermod -a -G app_os oracle

NOTE – for this change to take effect for oracle, I had to restart the VM.

Next, I’ve created some directories under the app_user home and granted appropriate permissions.

The app_user home is visible to the group :

cd $HOME ls -ld drwxr-x---. 7 app_user app_os 180 Apr 8 11:55 .

The bin directory will hold any shell scripts we need to execute.

The upload directory will hold feed files to be loaded into the database.

Note that oracle will have access to execute these through the group, but will not be able to write to them :

drwxr-x---. 2 app_user app_os 27 Apr 8 12:16 bin drwxr-x---. 2 app_user app_os 50 Apr 9 10:36 upload

Finally, we have a directory to hold any log files generated. Obviously, oracle does need write access to this directory :

drwxrwx---. 2 app_user app_os 54 Apr 8 14:09 logs

In the Database, I’ve created directory objects on each of these directories.

Note that home on Oracle Linux Server is a symbolic link to /opt/oracle/userhome, so we need to use the physical path in the Directory Object definition :

create or replace directory app_bin as '/opt/oracle/userhome/app_user/bin'

/

create or replace directory app_log as '/opt/oracle/userhome/app_user/logs'

/

create or replace directory app_upload as '/opt/oracle/userhome/app_user/upload'

/

…and granted the appropriate permissions to the HR database user :

grant read, execute on directory app_bin to hr;

grant read, write on directory app_log to hr;

grant read on directory app_upload to hr;

I’ve created a csv file containing all of the records in the employees table.

In SQLCL this is accomplished by connecting to the database as HR and running :

set sqlformat csv

spool employees.csv

select 'DATA' as row_type,

emp.*

from employees

/

spool off

I’ve also tweaked the row count at the end of the file to make it look like a trailer record you might expect to see on a feed file…

TRA,107

Coming gundun with gunzip

I’ve got a compressed version of the file in the uploads directory :

-rw-r--r--. 1 app_user app_os 3471 Apr 9 10:36 employees.csv.gz

Now I need to create a simple shell script to call from an external table preprocessor to unzip the file on the fly and send the output to STDOUT. This will then be read and uploaded into the external table automatically.

The script is called unzip_file.sh and, initially, it looks like this :

#!/usr/bin/sh

/usr/bin/gunzip -c $1

When invoked by an external table preprocessor call, the script will be passed the fully qualified path of the file specified as the location of the external table.

The permissions on the script are :

-rwxr-x---. 1 app_user app_os 198 Apr 8 13:04 unzip_file.sh

Now for the external table itself. This is called employees_zip_xt and is created in the HR schema :

create table employees_zip_xt

(

row_type varchar2(10),

employee_id number,

first_name varchar2(20),

last_name varchar2(25),

email varchar2(25),

phone_number varchar2(20),

hire_date date,

job_id varchar2(10),

salary number,

commission_pct number,

manager_id number,

department_id number

)

organization external

(

type oracle_loader

default directory app_upload

access parameters

(

records delimited by newline

logfile app_log : 'employees_zip_xt.log'

badfile app_log : 'employees_zip_xt.bad'

nodiscardfile

preprocessor app_bin : 'unzip_file.sh'

skip 1

load when row_type = 'DATA'

fields terminated by ',' optionally enclosed by '"'

missing field values are null

(

row_type char(10),

employee_id integer external(6),

first_name char(20),

last_name char(25),

email char(25),

phone_number char(20),

hire_date date "DD-MON-RR",

job_id char(10),

salary float external,

commission_pct float external,

manager_id integer external(6),

department_id integer external(4)

)

)

location('employees.csv.gz')

)

reject limit unlimited

/

The table will process the data written to STDOUT by the preprocessor script.

Hmmm, I wonder what that suspicious clump of leaves and twigs is covering…

select * from employees_zip_xt; ORA-29913: error while processing ODCIEXTTABLEFETCH routine ORA-29400: data cartridge error KUP-04095: preprocessor command /opt/oracle/userhome/app_user/bin/unzip_file.sh encountered error "/usr/bin/gunzip: line 57: /opt/oracle/product/23c/dbhomeFree/dbs/gzip: No such file or directory

Give me a hand up, will you ?

As well as the ever inscrutable ODCIEXTTABLEFETCH, we have KUP-04095 complaining that it can’t find an executable that we haven’t called.

Whilst we’ve specified the full path to the gunzip executable in our script, GNU gunzip calls gzip during execution. Because that doesn’t use a fully qualified path, it looks in the wrong place.

The solution then, is to set the PATH environment variable in our script…

#!/usr/bin/sh

# Need the PATH specified because gunzip executable is calling gzip under the covers

export PATH=/usr/bin

gunzip -c $1

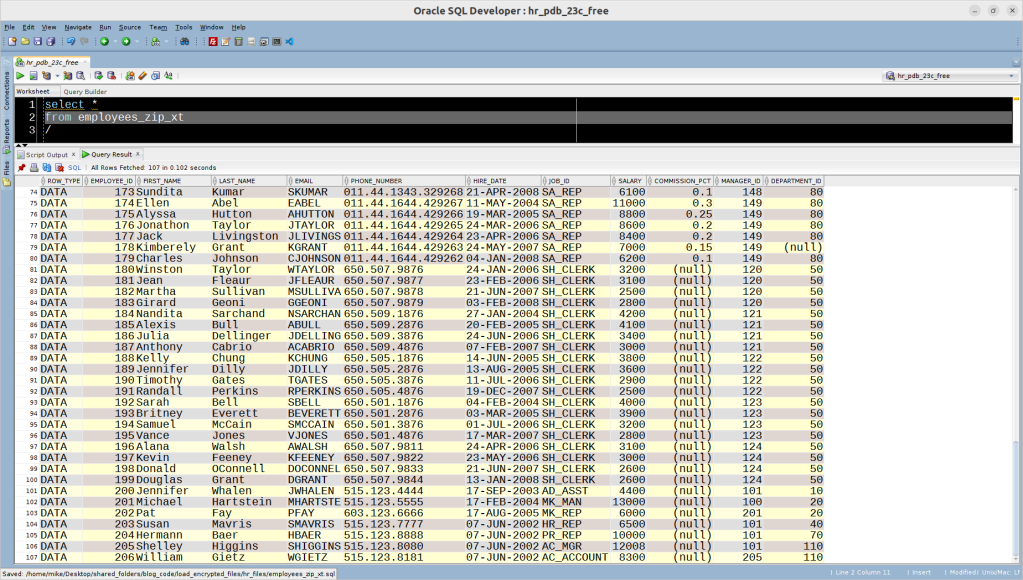

Now, when we query the table, it works as expected :

…and the uncompressed data is not persisted in a file…

ls -l emp* -rw-r--r--. 1 app_user app_os 3471 Apr 9 10:36 employees.csv.gz

Now we’ve established the concept, let’s try something a little more ambitious…

Where did I leave my keys ?

Just for a change, I’m going to start with the example that actually works as expected.

I’ve generated GPG keys for oracle and used the public key to encrypt the original csv. The encrypted file has been moved to the upload directory :

ls -l *.gpg -rw-r--r--. 1 app_user app_os 3795 Apr 9 12:31 employees.csv.gpg

Note that the passphrase has been saved in passphrase.txt in oracle‘s home directory with the following permissions :

ls -l passphrase.txt -rw-------. 1 oracle oracle 19 Apr 9 15:41 passphrase.txt

Now we need a script in our bin directory to decrypt the file (decrypt_file.sh) :

#!/usr/bin/sh

/usr/bin/gpg \

--decrypt \

--pinentry-mode loopback \

--passphrase-file /opt/oracle/userhome/oracle/passphrase.txt \

--batch $1 2>/dev/null

We have a new external table – employees_enc_xt, which may look somewhat familiar :

create table employees_enc_xt

(

row_type varchar2(10),

employee_id number,

first_name varchar2(20),

last_name varchar2(25),

email varchar2(25),

phone_number varchar2(20),

hire_date date,

job_id varchar2(10),

salary number,

commission_pct number,

manager_id number,

department_id number

)

organization external

(

type oracle_loader

default directory app_upload

access parameters

(

records delimited by newline

logfile app_log : 'employees_enc_xt.log'

badfile app_log : 'employees_enc_xt.bad'

nodiscardfile

preprocessor app_bin : 'decrypt_file.sh'

skip 1

load when row_type = 'DATA'

fields terminated by ',' optionally enclosed by '"'

missing field values are null

(

row_type char(10),

employee_id integer external(6),

first_name char(20),

last_name char(25),

email char(25),

phone_number char(20),

hire_date date "DD-MON-RR",

job_id char(10),

salary float external,

commission_pct float external,

manager_id integer external(6),

department_id integer external(4)

)

)

location('employees.csv.gpg')

)

reject limit unlimited

/

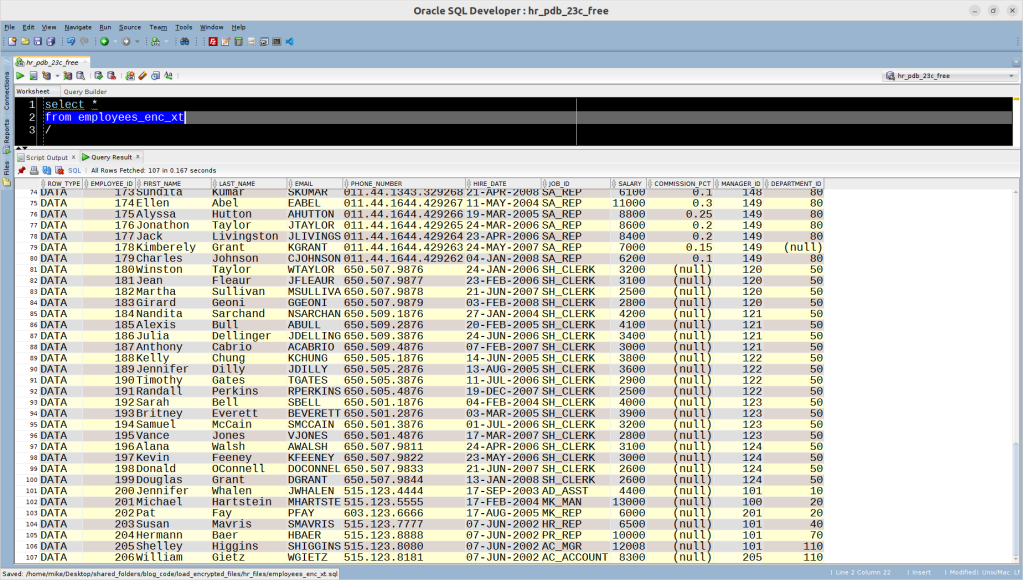

…as will the results when we query it :

When oracle doesn’t own the private GPG key

The point of a private GPG key is that it’s only accessible to the user that owns it. If that’s not oracle then we need to find a means of executing as the account which does own the key.

No biggie, the PDB_OS_CREDENTIAL parameter has that covered…oh, what have I stepped in.

PDB_OS_CREDENTIAL has been around since 12c and should allow you to specify the os user which runs when you invoke an external table preprocessor.

Coincidentally, the bug that causes this setting to be ignored has been around for a similar length of time.

I have personally confirmed this on versions 19c (splash !), 21c (squelch !) and now 23c (splat !).

I do hope that’s mud.

In these articles, Szymon Skorupinski does a marvellous job of explaining system behaviour both before and after the application of the patch to fix this issue :

If applying the patch is going to be problematic, not least because of the regression testing effort required to make sure none of your existing code breaks, then you do have an alternative…

I should be able to scrape it off once it dries

Before external tables there was SQL*Loader. It’s still supported, which is just as well in this case as we can use it to run a script as the owner of the PGP key and load the data directly into a staging table in the database.

The advantage of this approach is that you can execute it as the owner of the GPG key (although I’m still using the oracle user in this example).

To start with, we need a table to stage the data into :

create table employees_stg

(

row_type varchar2(10),

employee_id number,

first_name varchar2(20),

last_name varchar2(25 ),

email varchar2(25),

phone_number varchar2(20),

hire_date date,

job_id varchar2(10),

salary number,

commission_pct number,

manager_id number,

department_id number

)

/

Now we create a SQL*Loader control file in the bin directory on the server (load_employees.ctl) :

options(skip=1)

load data

badfile '/opt/oracle/userhome/app_user/logs/load_employees.bad'

discardfile '/opt/oracle/userhome/app_user/logs/load_employees.dis'

append

into table employees_stg

when row_type='DATA'

fields terminated by "," optionally enclosed by '"'

trailing nullcols

(

row_type char(10),

employee_id integer external(6),

first_name char(20),

last_name char(25),

email char(25),

phone_number char(20),

hire_date date "DD-MON-RR",

job_id char(10),

salary float external,

commission_pct float external,

manager_id integer external(6),

department_id integer external(4)

)

Finally, we create script to perform the load (load_employees.sh) :

#!/usr/bin/sh

/usr/bin/gpg \

--decrypt \

--pinentry-mode loopback \

--passphrase-file /opt/oracle/userhome/oracle/passphrase.txt \

--batch \

/home/app_user/upload/employees.csv.gpg 2>/dev/null|sqlldr \

control=load_employees.ctl \

userid=connect_string \

log=/home/app_user/logs/load_employees.log \

data=\'-\'

…replacing connect_string with the connect string for the schema you’re loading into.

Incidentally, in order to avoid having a database password hard-coded in the script, an Oracle Wallet would come in handy here.

Note that we tell SQL*Loader to read it’s STDIN for the data by specifying :

data =\'-\'

When we run this, we can see that it works as expected :

You could easily automate this load process by creating a DBMS_SCHEDULER external job.

Conclusion

As we’ve seen, it’s perfectly possible to pipe data from binary format files into the database without having to persist it in plain text. However, if you do decide to go down this path, you may want to wear your wellies.